Benjamin's Design Study

(→Metadata) |

(→Metadata) |

||

| Line 82: | Line 82: | ||

The solutions to the security problem which have been raised include: | The solutions to the security problem which have been raised include: | ||

# Use multiple inheritance | # Use multiple inheritance | ||

| − | # Have an ACL class and a POSIX class with the | + | # Have an ACL class and a POSIX class with the Node class containing a field for both, which is set to null for files which don't have permissions set with that type of permission model. This solution is not acceptable as then the file class would need to know about permissions and how to handle conflicts between the two. |

# Have a FilePermissions class which can then contain a pointer to a specific ACL or POSIX class (or both). | # Have a FilePermissions class which can then contain a pointer to a specific ACL or POSIX class (or both). | ||

Revision as of 21:23, 29 September 2009

Contents |

Problem

My design study is a continuation of the 425 project from last semester. The project is a generic file system parser which will be able to directly parse any sort of file system from a raw disk and display that information in a consistent manner.

Requirements

- The system must be able to handle any directory hierarchy based file system which is used on modern desktop, laptop, server and portable device operating systems today.

- The system must be able to interpret a raw MBR and determine where the individual partitions are.

Constraints

- The produced model of the hard drive must be completely generic and there must not be any file system specific components to it.

Initial Design

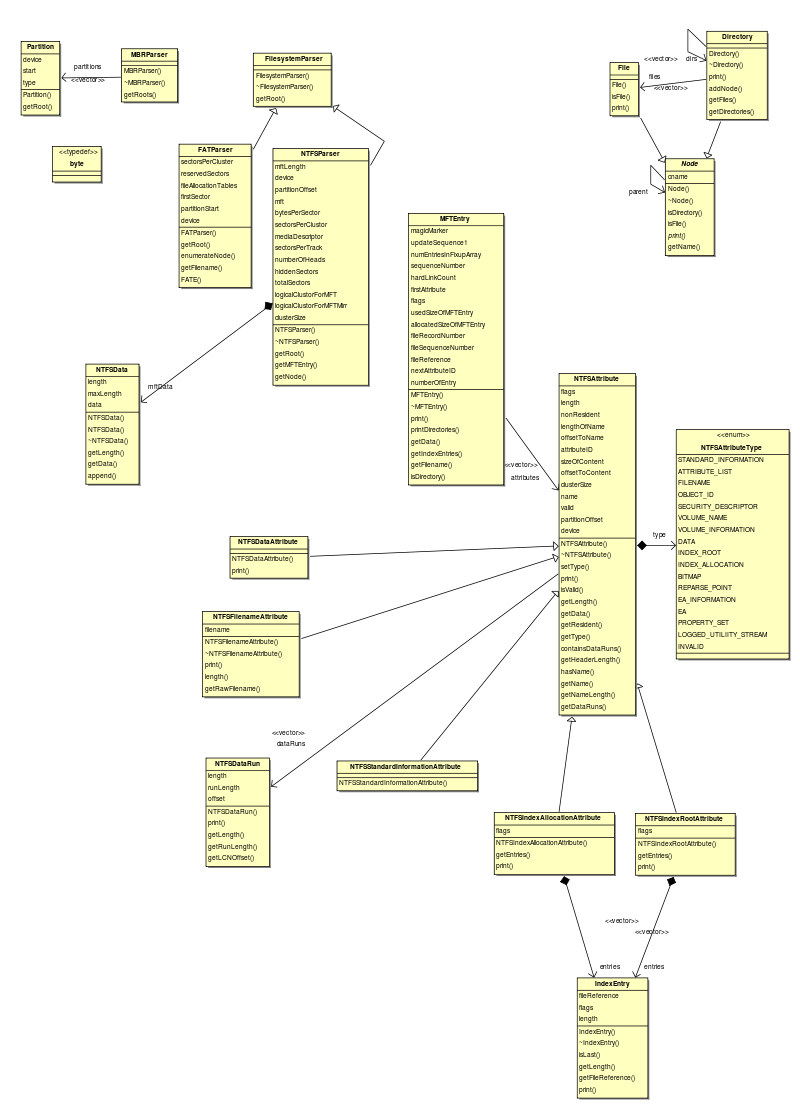

The initial version only has support for parsing NTFS and FAT, although in the future this will be expanded to include HFS+, EXT, ReiserFS, etc. Currently RAID systems are not handled, although this will be important in the future.

For specifics of how the individual parsers work, and why the classes have been named as they are, the reader is referred to the FAT and NTFS specifications.

- Partition: Represents a partition on the hard drive.

- MBRParser: A class which can be used to parse the MBR of a hard drive.

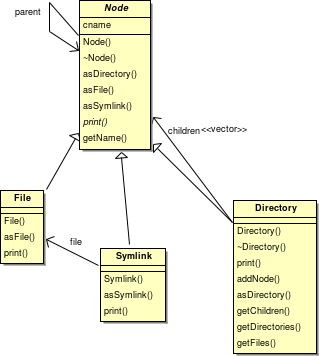

- Node: Represents a node in the file system (which is either a directory or a file).

- Directory: Represents a directory on a given file system.

- File: Represents a file on a given file system.

- FilesystemParser: An abstract class which all File System parsers inherit from.

- FATParser: A class which parses FAT file systems.

- NTFSParser: A class which parses NTFS file systems.

- MFTEntry: Represents an entry in the Master File Table.

- NTFSAttribute: Represents a single attribute for a given MFT entry - an attribute can be one of many types. Each attribute holds different pieces of information about the file or directory represented by the MFT entry.

- NTFSDataRun: In NTFS if the data of an attribute is too big to fit in the MFT entry, then a data run is created somewhere else on the disk. This class is used to represent information about a single data run.

- IndexEntry: Represents a single directory entry (either a file or another directory) in the NTFS file system.

Critisms

Upon initial inspection, it seems as though there are no major design flaws, however a few minor issues have come up:

- Although it's not visible on the diagram, there is a large function which does a type switch based upon the type which has been read from the disk (it's not part of any individual class, so it can't be shown on the UML diagram). Although this technically violates the Beware type switches maxim, because it's creating an instance of the appropriate attribute from data which has been read from disk, I believe there is no other way to do this.

- The fact that an attribute can be one of many types violates the Class hierarchies should be deep and narrow maxim, although again, this is justifiable because of the problem domain (The NTFS file system design).

- Although it's not visible on the diagram, some of the constructs are getting rather large, and should possibly be split up in to separate private methods so that it conforms to the Reduce the size of methods maxim.

- Likewise, the number of arguments needed for each attribute has been growing. Currently each attribute needs five different arguments to be passed in to it, meaning that this should probably be refactored in order to Reduce the number of arguments.

- Again, it's not visible on the diagram, but in some places variables of type char* are being returned, when they should be of type const char* so that I Don't expose mutable attributes.

- Throughout the code a lot of the constants are not named, as the code is designed to be read next to the NTFS spec, and it would hinder understanding of the code if these were placed as constants such as NTFS_ATTRIBUTE_LENGTH_OFFSET or some such similar thing, when the spec gives these in a table and the variable names themselves make it obvious what the constants are used for, so it was a concious decision to break the Named constants maxim.

- The file system structure also has the same problems as Parse tree design. I am currently solving this with the IsDirectory() and IsFile() functions. In its current state, it's obvious that this needs to be cleaned up, because it's kind of using the composite pattern, but also has separate concepts of files and directories within a directory - this should be changed to just a single node - as this will cause problems when I need to add symbolic links (see below).

Future plans

Only one of the critisims given above would require a change to the code which would be visible on the UML diagram (The one to reduce the number of arguments to each NTFS attribute), the rest of the changes are at the code level. Therefore I am going to give a list of future plans for the code, which have not yet been implemented and which I had not thought about when designing the current parser because these are not important given the current goals and priorities of the parser and the code which uses the parser.

- In the future we will need to be able to read from encrypted files.

- We will also need to be able to read compressed files.

- The final representation of the file system will also need to know some metadata about the files, such as creation/modification/access times, security permissions, etc. Metadata relating to the contents of the files will be handled at a higher level - as this code does not actively read the files, but gives their physical location on disk to client classes.

- NTFS and HFS+ both have the concept of Forks (Commonly called an alternative data stream under Windows). These will also need to be represented in the file system hierarchy.

- Symbolic links need to be added.

Solutions

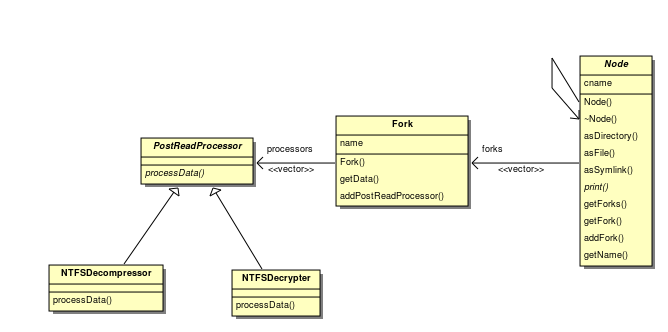

Forks

In NTFS a file's data is stored in a fork. Any node in the NTFS file system can have 0 or more forks. Forks are not unique to NTFS (HFS+ has them too), however they are somewhat rare in other file systems. In order to support forks and allow any file or directory to have a varying number of streams of data, I propose that all nodes should have 0 or more forks, and if the filesystem does not support forks, then directories will have none and the files will have one fork, representing the file's only data stream.

For a UML diagram showing how this fits in, please see the following section.

Compression/Encryption

In the current design, each file doesn't have the ability to actually fetch the file from the hard drive, all it does currently is record the filename. In order to implement both compressed and encrypted files for the file system, I will need to add the ability for the actual data of each file to be retrieved inside the file class. To handle compression and encryption, I propose that I use the strategy pattern, as each filesystem will have its own compression and encryption algorithm (if implemented at all), and a key will need to be suppled for decryption (and of course it doesn't make sense to have the file class know anything about encryption algorithms).

The next issue is do we have a single 'slot' for an encryption algorithm and a single 'slot' for the compression algorithm for each file, only allowing one for each, or do we create the concept of a post-reading processor, and have the file contain a list of algorithms which must be applied to the data after it has been read? I chose to go with the later for two reasons:

- For filesystems which implement both encryption and compression, they might "apply" them in different orders. NTFS might first encrypt the data, then compress it, whereas HFS+ might compress the data first, then encrypt it. Practically it makes sense to compress the data before encrypting it (because almost all modern encryption algorithms will make the data a lot more random and less predictable), however some file systems may apply them in different orders, and in order to make the system most flexible, we should allow for this and it makes sense to have the file system parser itself determine which order post processors should be applied in.

- There may be other post processing which needs to be done which we haven't thought of yet.

Note: It is conceivable that a different algorithm or set of algorithms may need to be applied to an individual fork of data from a given file, rather than all forks in a given file. This is why the algorithms can be different for each fork rather than handling this at the file level.

Metadata

As this is primarily a parser for the current generation hierarchical based file systems (we're not taking in to account anything like tagged based file systems), I decided to do a survey of the current metadata which is stored by the file systems, which we are interested in. The primary data I am currently interested in is:

- Security information (Who owns the file, and who has access to it, and at what levels?)

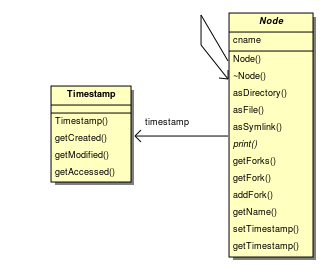

- Creation/Modification/Access times

There are two problems which have risen:

- Unfortunately not all file systems record both of these types of information (FAT doesn't have the concept of users or security), and EXT file systems often have timestamping disabled for performance reasons.

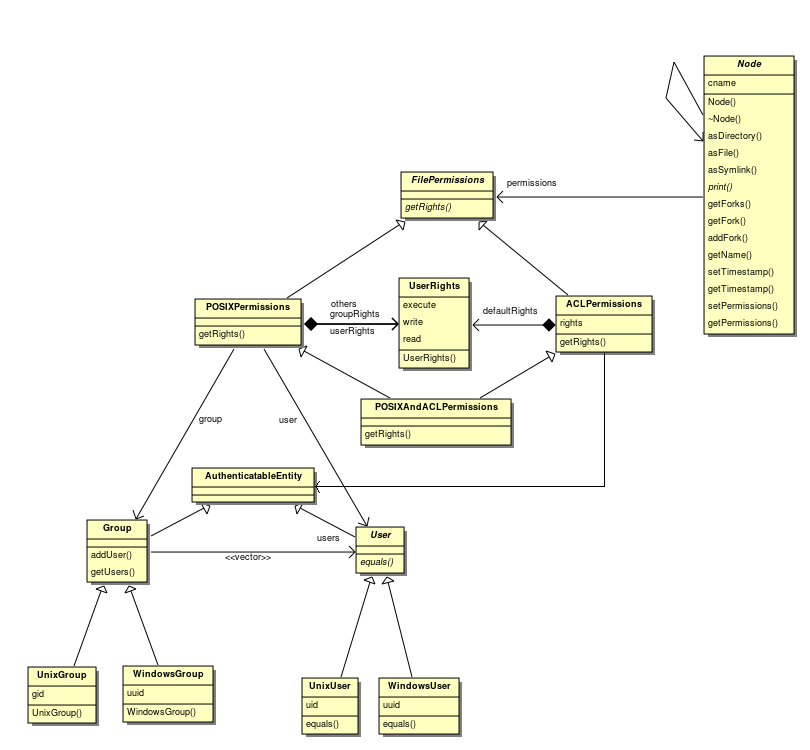

- The file systems store security permissions in vastly different ways. The ones with Unix roots (EXT, ReiserFS, HFS(+)) all have the traditional POSIX file permissions - each file has an owner and a group, and read/write/execute flags are set for the owner, group and others. NTFS only supports Access Control Lists (ACL), which are much more fine grained and contain a list of users/groups and the operations they're allowed to perform. To make this more complicated, the Unix file systems have had ACL based security permissions added in.

Ultimately we want a single method which takes a user and returns what permissions that user has for the node.

The solutions to the security problem which have been raised include:

- Use multiple inheritance

- Have an ACL class and a POSIX class with the Node class containing a field for both, which is set to null for files which don't have permissions set with that type of permission model. This solution is not acceptable as then the file class would need to know about permissions and how to handle conflicts between the two.

- Have a FilePermissions class which can then contain a pointer to a specific ACL or POSIX class (or both).

I believe in this case using Multiple Inheritance is the best option, because to return the list of users (or groups) the class will need access to the data from both the POSIX and ACL, so it makes sense to simply override the "GetUserPermissions" method in the bottom class, rather than giving the knowledge of how to combine the models in other places.

Notes:

- The getRights method of the FilePermissions class takes a User object and returns a UserRights object.

- The ACLPermissions class has a list containing pairs of Authenticatable Entities and their rights (it must be a list, because order is important when deciding what permissions to give).

I think the best option for the time stamps is to have a separate class which stores that information, and set the pointer to that class to null if the filesystem doesn't support timestamps (this situation should hopefully be fairly rare, but is certainly an issue, especially on customised Linux systems).

Too many parameters

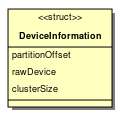

Currently each attribute is passed:

- The offset of the partition from the start of the disk (this needs to be known because any offsets within the attributes are relative to the start of the partition).

- The cluster size

- A file pointer to the device or file which the partition is being read from

- The offset of the attribute from the start of the MFT entry

- The byte stream of the attribute itself.

Only the last two of these are unique for each attribute, the rest are the same for all attributes on a partition. Therefore I propose creating a struct containing the first three of these attributes which can be passed as the first parameter to all attributes. I have chosen to use a struct because this is simply a group of related values rather than an object which represents a concept in the domain.

Symbolic Links

The solution to this seems fairly obvious:

- Fix up the current abstract representation of file systems (using the solutions above).

- Add another type of node (the symbolic link) which points to a file.