User:Jenny Harlow/Design study

Jenny Harlow (Talk | contribs) (Move initial design detail to a subpage) |

Jenny Harlow (Talk | contribs) |

||

| Line 2: | Line 2: | ||

---- | ---- | ||

| − | == Project == | + | ==Project description== |

Designing a simple collections framework | Designing a simple collections framework | ||

| Line 24: | Line 24: | ||

I started my design study by thinking what I would want to change in the Java Collections Framework. See [[User:Jenny Harlow/Design study/Java Collections Framework critique|this page]] for my critique of the Java Collections Framework. | I started my design study by thinking what I would want to change in the Java Collections Framework. See [[User:Jenny Harlow/Design study/Java Collections Framework critique|this page]] for my critique of the Java Collections Framework. | ||

| − | + | ==Design stud == | |

| − | + | ===Scope=== | |

Having decided that the main issue for the developers of the Java Collections Framework is the huge scope of the undertaking, I naturally wanted to avoid as much of that difficulty as I could by limiting the scope of my design project. | Having decided that the main issue for the developers of the Java Collections Framework is the huge scope of the undertaking, I naturally wanted to avoid as much of that difficulty as I could by limiting the scope of my design project. | ||

| Line 34: | Line 34: | ||

I was not aiming to provide data structures for a data processing application where the collection is at the centre of the application, the volume of data to be handled is very very large, and performance is critical. | I was not aiming to provide data structures for a data processing application where the collection is at the centre of the application, the volume of data to be handled is very very large, and performance is critical. | ||

| − | + | ===Data structures and capabilities=== | |

The design should provide a framework of basic structures with limited core functionality ([[#Big picture design decisions|design decisions]]: what are the 'basic structures'? what is the 'core functionality'?). | The design should provide a framework of basic structures with limited core functionality ([[#Big picture design decisions|design decisions]]: what are the 'basic structures'? what is the 'core functionality'?). | ||

| Line 44: | Line 44: | ||

The design was intended to provide an integrated framework so that data could be moved between data structures easily and there was a consistent way of invoking as much common functionality as there really is between types ([[#Big picture design decisions|design decisions]]: what commonality is really there?). | The design was intended to provide an integrated framework so that data could be moved between data structures easily and there was a consistent way of invoking as much common functionality as there really is between types ([[#Big picture design decisions|design decisions]]: what commonality is really there?). | ||

| − | + | ===Contracts=== | |

A major issue for a collections framework is contracts: Collections are for other programmers to use and so using and abiding by [[Design by contract|design by contract]] has to be just as important, if not more so, as conspicuous display of [[Gang of Four 1995|GoF]]-authorised design patterns. Am I claiming the design by contract is more important here than in some of the other projects? No, but I am saying that it is very very relevant when designing a framework whose sole purpose is to provide objects to be used by clients. | A major issue for a collections framework is contracts: Collections are for other programmers to use and so using and abiding by [[Design by contract|design by contract]] has to be just as important, if not more so, as conspicuous display of [[Gang of Four 1995|GoF]]-authorised design patterns. Am I claiming the design by contract is more important here than in some of the other projects? No, but I am saying that it is very very relevant when designing a framework whose sole purpose is to provide objects to be used by clients. | ||

| − | + | === Optional extras === | |

If I have time I am interested in the idea of a performance test suite for collections, running some standard tests and providing a summary of results. | If I have time I am interested in the idea of a performance test suite for collections, running some standard tests and providing a summary of results. | ||

| − | + | == Initial Design == | |

| − | + | ===Overview=== | |

When I started, I was thinking along the lines of simply recreating the structure in the Collections Framework: a List, a Set, a Map, a Queue, a Stack; cutting down what I viewed as almost-duplicated operations or 'wouldn't-it-be-nice-if' optional functionality; cleaning up things like vector and stack ... | When I started, I was thinking along the lines of simply recreating the structure in the Collections Framework: a List, a Set, a Map, a Queue, a Stack; cutting down what I viewed as almost-duplicated operations or 'wouldn't-it-be-nice-if' optional functionality; cleaning up things like vector and stack ... | ||

| Line 74: | Line 74: | ||

| − | + | ===Big picture design decisions=== | |

I decided to try to create a framework based on this 'what matters is behaviour' idea and decided on a 'big picture design' as follows: | I decided to try to create a framework based on this 'what matters is behaviour' idea and decided on a 'big picture design' as follows: | ||

| Line 88: | Line 88: | ||

*Every structure should support iteration. | *Every structure should support iteration. | ||

| − | + | ===Slightly smaller-picture design decisions=== | |

*Initially, I decided not to provide any collection subtype especially for associations: I reasoned that if I provided a pair type then users could use either sequences or bags of pairs as they wanted, using the pair methods to manipulate the objects in the association. However, my purist stance on this did not survive long: | *Initially, I decided not to provide any collection subtype especially for associations: I reasoned that if I provided a pair type then users could use either sequences or bags of pairs as they wanted, using the pair methods to manipulate the objects in the association. However, my purist stance on this did not survive long: | ||

| Line 103: | Line 103: | ||

*In order to be lazy and avoid having to write my own implementations I wanted to use the existing Java Collections Framework to provide implementations so as to be able to demonstrate and test my design. I used the [[Adapter]] pattern to adapt the Collections Framework interfaces to the interfaces in my design (again, see [[User:Jenny Harlow/Design study/Initial design detail|initial design detail]]). | *In order to be lazy and avoid having to write my own implementations I wanted to use the existing Java Collections Framework to provide implementations so as to be able to demonstrate and test my design. I used the [[Adapter]] pattern to adapt the Collections Framework interfaces to the interfaces in my design (again, see [[User:Jenny Harlow/Design study/Initial design detail|initial design detail]]). | ||

| − | + | ===The performance debate=== | |

Initially, as I have discussed, I wanted my design to stand aloof from performance issues. I viewed that as an implementation detail, and if I provided the flexibility to choose an implementation, then those who cared could use/build/beg/borrow/steal an implementation that suited their needs. Whenever data structures are discussed, performance seems to be the big talking point. I suggest that in practice, for most small-scale uses, the choice between HashSet/TreeSet/LinkedHashSet, HashMap/TreeMap or ArrayList/LinkedList will make negligible practical difference because that choice plays only a very small part in the efficiency of the overall application. If you think that performance really does matter, then then making a careful choice of data structure may be wasted if you don't consider the performance of your hash function (hashCode()) in relation to your range of keys, not to mention the effect of correct choice of capacity and load factors... Just choosing a HashSet says very little about what performance you will actually get. When performance really really matters, for large-scale data-processing-focussed applications, then many of the forum discussions seem to end up advocating writing your own hashmaps tailored to your own keys or using specialist data structure libraries. For an interesting discussion of the Java HashSet and its underlying implementation with a HashMap, see this [http://stackoverflow.com/questions/2235546/why-does-hashset-implementation-in-sun-java-use-hashmap-as-its-backing stackoverflow thread]. | Initially, as I have discussed, I wanted my design to stand aloof from performance issues. I viewed that as an implementation detail, and if I provided the flexibility to choose an implementation, then those who cared could use/build/beg/borrow/steal an implementation that suited their needs. Whenever data structures are discussed, performance seems to be the big talking point. I suggest that in practice, for most small-scale uses, the choice between HashSet/TreeSet/LinkedHashSet, HashMap/TreeMap or ArrayList/LinkedList will make negligible practical difference because that choice plays only a very small part in the efficiency of the overall application. If you think that performance really does matter, then then making a careful choice of data structure may be wasted if you don't consider the performance of your hash function (hashCode()) in relation to your range of keys, not to mention the effect of correct choice of capacity and load factors... Just choosing a HashSet says very little about what performance you will actually get. When performance really really matters, for large-scale data-processing-focussed applications, then many of the forum discussions seem to end up advocating writing your own hashmaps tailored to your own keys or using specialist data structure libraries. For an interesting discussion of the Java HashSet and its underlying implementation with a HashMap, see this [http://stackoverflow.com/questions/2235546/why-does-hashset-implementation-in-sun-java-use-hashmap-as-its-backing stackoverflow thread]. | ||

| Line 109: | Line 109: | ||

Since the scope of my project was data structures was for small non-performance critical applications I stuck to this for Bags and only provided a HashTable-based implementation with no tuning operations. However I did decide to provide both a sequential access and a random access implementation for the Sequence. This was partly to test whether my design really supported the plug-and-play dynamically-changeable underlying implementations that I wanted to be able to provide and also because almost everyone I talked to regarded it as a major issue for sequences and who am I to gainsay them? | Since the scope of my project was data structures was for small non-performance critical applications I stuck to this for Bags and only provided a HashTable-based implementation with no tuning operations. However I did decide to provide both a sequential access and a random access implementation for the Sequence. This was partly to test whether my design really supported the plug-and-play dynamically-changeable underlying implementations that I wanted to be able to provide and also because almost everyone I talked to regarded it as a major issue for sequences and who am I to gainsay them? | ||

| − | + | ===Unmodifiability=== | |

I decided not to try to provide the equivalent of the Unmodifiable wrappers in the Java Collections Framework. I thought about this for a long time. My problem is that the Unmodifiable wrappers are the chief perpetrators of evil acts of contract breaking (or - if you prefer the sophistry of - of exploiting 'optional' clauses in contracts) in the Java Collections Framework. There are better ways to do it, but is it worth it? Java say that there are two uses of the unmodifiable wrappers: "to make a collection immutable once it has been built" (make the collection, wrap, destroy the original collection); and "to allow certain clients read-only access to your data structures" - make collection, hand wrapped collection to client, "clients can look but not modify ..." ([http://download.oracle.com/javase/tutorial/collections/implementations/wrapper.html Java Tutorials - Wrapper Implementations]). Well, it all depends on what you mean by 'immutable': the wrapper only prevents the client writing into or removing elements from the collection. Provided that you don't care that - if your objects themselves are not immutable - then your client can do whatever they like to the actual elements in the collection, including setting them all to null, maybe it's great. I did not think that just being able to control changes to the membership of the collection while allowing the client's view to reflect any changes made to the original, but not actually protecting the actual members of the collection from molestation, was a good enough reason to have to make the extra provisions I thought that I would have make to accommodate this in the design. | I decided not to try to provide the equivalent of the Unmodifiable wrappers in the Java Collections Framework. I thought about this for a long time. My problem is that the Unmodifiable wrappers are the chief perpetrators of evil acts of contract breaking (or - if you prefer the sophistry of - of exploiting 'optional' clauses in contracts) in the Java Collections Framework. There are better ways to do it, but is it worth it? Java say that there are two uses of the unmodifiable wrappers: "to make a collection immutable once it has been built" (make the collection, wrap, destroy the original collection); and "to allow certain clients read-only access to your data structures" - make collection, hand wrapped collection to client, "clients can look but not modify ..." ([http://download.oracle.com/javase/tutorial/collections/implementations/wrapper.html Java Tutorials - Wrapper Implementations]). Well, it all depends on what you mean by 'immutable': the wrapper only prevents the client writing into or removing elements from the collection. Provided that you don't care that - if your objects themselves are not immutable - then your client can do whatever they like to the actual elements in the collection, including setting them all to null, maybe it's great. I did not think that just being able to control changes to the membership of the collection while allowing the client's view to reflect any changes made to the original, but not actually protecting the actual members of the collection from molestation, was a good enough reason to have to make the extra provisions I thought that I would have make to accommodate this in the design. | ||

| − | + | ===Big Design Up-front?=== | |

At this point it is clear that I did quite a lot of [[Big design up front|big design up-front]], and it did make me very aware of the problems I would have encountered if I had had a client changing their mind, etc etc. However, I do feel that thinking hard about the underlying concepts (what do we want from collections, types as behaviour) was beneficial: personally I needed to do that to get from my initial very unoriginal 'same-as-last-time-but-smaller' thinking to what I saw as the key points or pivots in the design. The problem of course was that the more I thought the more complexities I could see and the more hopeless actually trying to write any code became - [http://sourcemaking.com/antipatterns/analysis-paralysis analysis-paralysis]. | At this point it is clear that I did quite a lot of [[Big design up front|big design up-front]], and it did make me very aware of the problems I would have encountered if I had had a client changing their mind, etc etc. However, I do feel that thinking hard about the underlying concepts (what do we want from collections, types as behaviour) was beneficial: personally I needed to do that to get from my initial very unoriginal 'same-as-last-time-but-smaller' thinking to what I saw as the key points or pivots in the design. The problem of course was that the more I thought the more complexities I could see and the more hopeless actually trying to write any code became - [http://sourcemaking.com/antipatterns/analysis-paralysis analysis-paralysis]. | ||

| − | + | === Initial design detail === | |

| − | For the details of my initial design see [[User:Jenny Harlow/Design study/Initial design detail|this page]]. | + | For the details of my initial design see [[User:Jenny Harlow/Design study/Initial design detail|this page]]. I have included comments on the glaring flaws in the initial design in this description. |

| − | == Design | + | == Design Improvements == |

| + | I talked through many of the design improvements as I described the [[User:Jenny Harlow/Design study/Initial design detail|initial design details]]. | ||

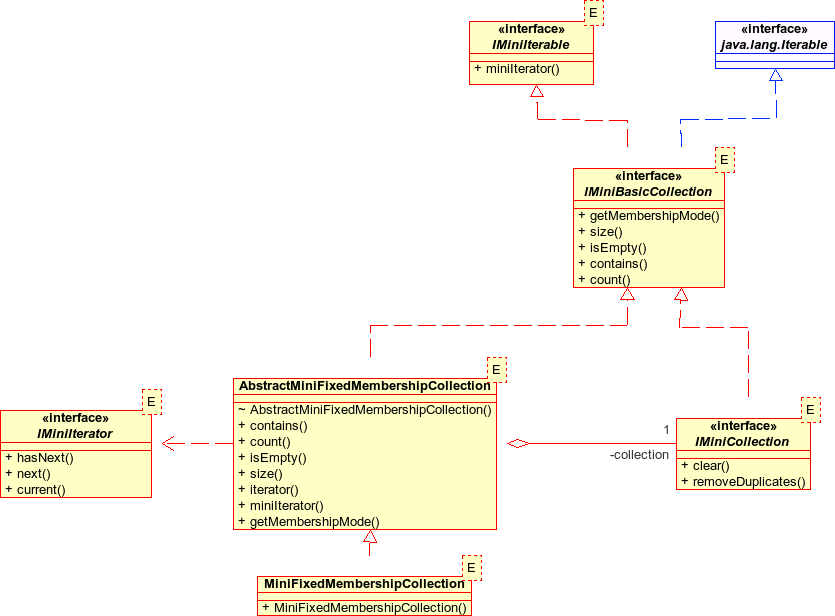

| + | One further change I made was to decide to add the '[[#Unmodifiability|unmodifiable]]' collections described above. I still question how useful these really are but it was interesting to do it, and looking at the uml diagrams for the initial design I realised that I could provide an equivalent of the Java 'unmodifiable' wrappers fairly easily. I created a type IMiniBasicCollection with the same 'non-destructive' operations as IMiniCollection and made IMiniCollection a subtype of this, ie adding the destructive behaviour. I had already move the removeCurrent() operation out of the IMiniIterator type, so IMiniBasicCollection could be iterable, and neither the type nor the iterator provides any operations to alter the membership of the collection. I preferred to call the concrete class 'fixed membership' rather than unmodifiable. This composes an IMiniCollection so that the fixed membership view reflects any changes in the backing collection. | ||

| + | [[Image:JennyFinalCollections.png|frame|center|alt="FInal toplevel collections for Jenny's design"|IMiniBasicCollection and IMiniCollection]] | ||

| + | I also made IMiniBasicCollection implement java.lang.Iterable as well as my own IMiniIterable. This was simply to be able to use the for-each construct with my collections. Clearly, it is only a matter of time before Java embrace MiniCollections and provide a for-each that uses IMiniIterator, but until then I put in this bodge. It was very interesting working out how to then make the for-each deliver the same collection elements as my own iterators. Essentially I adapted my own iterators (themselves adapted from Iterators) back to the Iterator interface (for-each expects an iterator that returns an element with each call to next() and stops when hasNext() == false; IMiniIterators do not behave like this). There is a better explanation of why this was necessary in the [[User:Jenny Harlow/Design study/Final design detail|final design details]] for bags of singles and bags of pairs. | ||

| − | + | The final design also includes the abstract factories which were implicit in the intentions of initial design but not actually in the diagrams. | |

| + | ==Final Design== | ||

| + | For the details of my final design see [[User:Jenny Harlow/Design study/Final design detail|this page]]. | ||

| + | ==Design Critique== | ||

== Files == | == Files == | ||

Revision as of 00:41, 31 August 2010

Navigation shortcuts: Wiki users:Jenny Harlow

Contents |

Project description

Designing a simple collections framework

Introduction

In COSC324 Wal emphasised the use of Collections for efficient programming. He pointed out some of the ways in which the Java Collections Framework uses design patterns (wrappers, iterators, factory methods), but also some of the glaring Arrghghgs (the OO term seems to be anti-patterns) that there seem to be in the current design. Naturally we all nodded wisely and agreed that it is just terrible ... It's easy to criticise, but just how easy is it to design a collections suite?

This project will attempt to redesign part of the Java Collections Framework from the point of view of 'if we were starting again, what might we do'. The whole framework is huge, and includes many specialised features such as the java.util.concurrent classes. This project will focus on what we might consider to be the main everyday-usage collections - the ones that come into almost everything we might want to write: lists, sets, maps - and provide only a limited set of most-needed functionality for each.

The Java Collection Framework

Design goals for the Java Collections Framework

Java wanted to have a unified Collections Framework to make programming using data structures and algorithms easier (providing useful ones in their own API, promoting interoperability between different APIs). Java say thatThe main design goal was to produce an API that was reasonably small, both in size, and, more importantly, in 'conceptual weight.' It was critical that the new functionality not seem alien to current Java programmers; it had to augment current facilities, rather than replacing them. At the same time, the new API had to be powerful enough to provide all the advantages described above. (Oracle Java SE Documentation)

A brief overview of the Java Collections Framework

See this page for a brief overview of the Java Collections Framework.

Critique of the Java Collections framework

I started my design study by thinking what I would want to change in the Java Collections Framework. See this page for my critique of the Java Collections Framework.

Design stud

Scope

Having decided that the main issue for the developers of the Java Collections Framework is the huge scope of the undertaking, I naturally wanted to avoid as much of that difficulty as I could by limiting the scope of my design project.

This project aimed to produce a collections framework suitable for small-scale non-specialist use. For example, my design should support the way that collections are commonly used as a convenient representation of relationships between objects (objects of class A can each have relationships with a group of objects of type Z, represented by class A objects having a data member which is a collection of references to Z types). Similarly, the design should support small non-performance critical data-storage-and processing applications - the proverbial video-store rentals system, for example.

I was not aiming to provide data structures for a data processing application where the collection is at the centre of the application, the volume of data to be handled is very very large, and performance is critical.

Data structures and capabilities

The design should provide a framework of basic structures with limited core functionality (design decisions: what are the 'basic structures'? what is the 'core functionality'?).

At a minimum, the design should provide the equivalent of the structures represented by the main List, Set and Map types in the Java Collections Framework. I am excluding Queue and Stack at least from my first attempt so that I could concentrate on those types, but I tried to consider the need to eventually be able to add Queue and Stack as a touchstone for how extensible the framework was.

The design did not aim to provide all of the capabilities offered in these main interfaces: as described above, the Java Framework has added functionality cheaply by making contracts optional.

The design was intended to provide an integrated framework so that data could be moved between data structures easily and there was a consistent way of invoking as much common functionality as there really is between types (design decisions: what commonality is really there?).

Contracts

A major issue for a collections framework is contracts: Collections are for other programmers to use and so using and abiding by design by contract has to be just as important, if not more so, as conspicuous display of GoF-authorised design patterns. Am I claiming the design by contract is more important here than in some of the other projects? No, but I am saying that it is very very relevant when designing a framework whose sole purpose is to provide objects to be used by clients.

Optional extras

If I have time I am interested in the idea of a performance test suite for collections, running some standard tests and providing a summary of results.

Initial Design

Overview

When I started, I was thinking along the lines of simply recreating the structure in the Collections Framework: a List, a Set, a Map, a Queue, a Stack; cutting down what I viewed as almost-duplicated operations or 'wouldn't-it-be-nice-if' optional functionality; cleaning up things like vector and stack ...

Then I started to think about types in terms of behaviour, not familiar labels. We choose data structure types that offer the behaviour we want. Performance is an implementation issue. In some circumstances it is a very important one, but nevertheless there are too many different factors that can affect actual performance for me to feel that it can be considered part of behaviour at the type level. My initial attempt to dismiss entirely any performance considerations from my design was unsuccessful, and performance is discussed further below, but I decided to start by considering data structure type behaviour.

The Java Collections Framework, and many others like the C++ STL containers, use labels like 'set' and 'map' which have a mathematical meaning, but this is simply because some aspect of the structure seems to coincide with the mathematical interpretation. A mathematical set comprises only unique objects and two mathematical sets are considered equal if they contain the same objects, irrespective of their ordering (note - this is not the same as saying that mathematical sets are 'unordered'). When we choose to use a data structure called a set, what is it that we want? Very often, we want to be able to insist that the structure only contains unique members. This could be done with an array-like underlying implementation that checks when elements are inserted (and we could define equality in terms of membership irrespective of ordering, although the calculation might have be computationally crushing for larger structures). But, if we are also happy to forgo any notion of ordering within the structure then we can exploit the faster find-time offered by a hashtable or hashmap, which can speed up both insertion and lookup. Note that not all set implementations automatically use hash structures: the C++ STL set is, implicitly, a sorted set, usually implemented with a tree. In Java you can choose a SortedSet if you want to guarantee uniqueness but also be able to have some notion of ordering. Would a user of SortedSet say that two sorted sets were equal based on ordered or unordered membership? Almost all of the time, they don't care about that (although it is central to the mathematical set concept) - what they want is something that can ensure that the elements are unique but be able to order them as well. What about a multiset? Ideal if you want to to keep the fast lookup of the underlying hashmap or hashtable but not have to have uniqueness... How does that relate to a mathematical set?

Similarly, a mathematical map is a synonym for a function, and must map to every element in a specified domain to one and only one member of a specified range. As a function, the notion of the ordering of the objects to which it is applied is irrelevant. We use data structures called maps when we want to store pair-wise relationships between objects. Sometimes, but not always, we also want to be able to guarantee that there will be only one 'value' associated with a given 'key' object. We are not defining a function to map any object of the key type to one and only one object of the 'value' type, in fact we often use maps to be able to change the values a key object is associated with. In addition, the restriction to only one association per key may be a nuisance, because we want an object to be associated with more than one other object, and we write ourself a multimap by using a set as the value a key object is associated with.

Sometimes we have particular imperative performance requirements which require that whatever else our structure does or does not do, it can meet those requirements. When this happens, the need for a particular implementation drives the choice of structure and may force us to forgo some other behaviour we might have otherwise have quite liked. For example, if we need O(1) lookup then we have to think in terms of a hashtable or hashmap, and we then have to forgo ordering or invent a way of trying to have both by having a data structure combining a sequence and a hashmap, and if we want O(1) lookup but non-unique keys we can get a multiset by associating cardinality values with keys.

My point is that we make choices on the basis of the behaviour we most want/can put up with/do not want and that this has very little to do with mathematical sets or maps.

- Sometimes we want to be able to use or apply some form of ordering: we want a sequence type where we can think of one element as before or after another.

- Sometimes we want to restrict the structure to having only one of each element (however equality is defined).

- Sometimes we want to be able to have a container where the elements are not single objects but associations between pairs.

- Sometimes we need to guarantee some aspect of the performance of the container.

- These needs are not mutually exclusive; they may coincide well, in terms of the available implementations, or we may have to compromise.

Big picture design decisions

I decided to try to create a framework based on this 'what matters is behaviour' idea and decided on a 'big picture design' as follows:

- The "basic data structures" are sequences and bags. Sequences have behaviour that reflects some notion of an ordering of the elements, bags do not.

- Bags essentially allow scope for performance improvements where sequences may not be good enough (like lookup) at the expense of ordering.

- Sequences and bags should both be capable of being restricted to unique member elements (based on whatever equality between the type of objects contained in the structure is), or of not imposing such a restriction.

- For each, I should try to stick to the core functionality needed to be able to use such a structure and distinguish between this core and the 'nice-to-have' variations on a theme.

- Sequences and bags are both subtypes of a collection supertype. This provides some integration and interoperability between them: there are some common operations and each subtype can be constructed from a different collection without having to know the subtype of that collection.

- For an association, the collection element is the ordered pair of objects:

- If an interface specifies any preconditions or conditional-caveats on behaviour, the user of the type must be able to check these without having to catch an exception.

- I decided to implement this with 'interrogation' methods rather than tag interfaces to allow dynamic changes in the object characteristics upon which the behaviour might depend.

- Every structure should support iteration.

Slightly smaller-picture design decisions

- Initially, I decided not to provide any collection subtype especially for associations: I reasoned that if I provided a pair type then users could use either sequences or bags of pairs as they wanted, using the pair methods to manipulate the objects in the association. However, my purist stance on this did not survive long:

- If part of the aim of the Collections Framework is to make things easier for programmers, stripping down too far means failing that aim: this is back to the overarching dilemma of what to provide - what is clutter and what is justified? In the end I decided that the user expectation of the equivalent of the present Map is strong enough that it should be provided.

- (A very weak reason, but I am trying to be honest here) I was interested in working out how to actually implement the integration of a nice flexible multimap into the framework.

- I therefore settled on having BagSingles and BagPairs types. I had assumed that there would be a supertype Bag but when I thought about the contract that that could offer, I decided that in terms of behaviour from the users point of view there was very little commonality even though from an implementation point of view I could see them as almost the same thing.

- I saw a restriction to unique members as added behaviour:

- A 'basic' Sequence or Bag does not restrict membership, some sort of fancy-Sequence or fancy-Bag does.

- I decided to use the Decorator pattern to provide the uniques-only versions of the Sequence, BagSingles and BagPairs types There many issues about this decision, which are discussed further under initial design detail.

- I also decided to try to provide sorted Sequences with using the Decorator pattern. This decision is also discussed further under initial design detail.

- I wanted a design that supported some flexibility about the actual implementation of the data structure:

- By using abstract classes as a middle layer between interfaces and concrete classes, I could make the framework relatively easy to extend (open closed principle).

- But I wanted to go further than this and do exactly what the intent of the Bridge pattern expresses: "decouple an abstraction from its implementation so that the two can vary independently" (Gang of Four). The bridges are discussed further under initial design detail.

- In order to be lazy and avoid having to write my own implementations I wanted to use the existing Java Collections Framework to provide implementations so as to be able to demonstrate and test my design. I used the Adapter pattern to adapt the Collections Framework interfaces to the interfaces in my design (again, see initial design detail).

The performance debate

Initially, as I have discussed, I wanted my design to stand aloof from performance issues. I viewed that as an implementation detail, and if I provided the flexibility to choose an implementation, then those who cared could use/build/beg/borrow/steal an implementation that suited their needs. Whenever data structures are discussed, performance seems to be the big talking point. I suggest that in practice, for most small-scale uses, the choice between HashSet/TreeSet/LinkedHashSet, HashMap/TreeMap or ArrayList/LinkedList will make negligible practical difference because that choice plays only a very small part in the efficiency of the overall application. If you think that performance really does matter, then then making a careful choice of data structure may be wasted if you don't consider the performance of your hash function (hashCode()) in relation to your range of keys, not to mention the effect of correct choice of capacity and load factors... Just choosing a HashSet says very little about what performance you will actually get. When performance really really matters, for large-scale data-processing-focussed applications, then many of the forum discussions seem to end up advocating writing your own hashmaps tailored to your own keys or using specialist data structure libraries. For an interesting discussion of the Java HashSet and its underlying implementation with a HashMap, see this stackoverflow thread.

Since the scope of my project was data structures was for small non-performance critical applications I stuck to this for Bags and only provided a HashTable-based implementation with no tuning operations. However I did decide to provide both a sequential access and a random access implementation for the Sequence. This was partly to test whether my design really supported the plug-and-play dynamically-changeable underlying implementations that I wanted to be able to provide and also because almost everyone I talked to regarded it as a major issue for sequences and who am I to gainsay them?

Unmodifiability

I decided not to try to provide the equivalent of the Unmodifiable wrappers in the Java Collections Framework. I thought about this for a long time. My problem is that the Unmodifiable wrappers are the chief perpetrators of evil acts of contract breaking (or - if you prefer the sophistry of - of exploiting 'optional' clauses in contracts) in the Java Collections Framework. There are better ways to do it, but is it worth it? Java say that there are two uses of the unmodifiable wrappers: "to make a collection immutable once it has been built" (make the collection, wrap, destroy the original collection); and "to allow certain clients read-only access to your data structures" - make collection, hand wrapped collection to client, "clients can look but not modify ..." (Java Tutorials - Wrapper Implementations). Well, it all depends on what you mean by 'immutable': the wrapper only prevents the client writing into or removing elements from the collection. Provided that you don't care that - if your objects themselves are not immutable - then your client can do whatever they like to the actual elements in the collection, including setting them all to null, maybe it's great. I did not think that just being able to control changes to the membership of the collection while allowing the client's view to reflect any changes made to the original, but not actually protecting the actual members of the collection from molestation, was a good enough reason to have to make the extra provisions I thought that I would have make to accommodate this in the design.

Big Design Up-front?

At this point it is clear that I did quite a lot of big design up-front, and it did make me very aware of the problems I would have encountered if I had had a client changing their mind, etc etc. However, I do feel that thinking hard about the underlying concepts (what do we want from collections, types as behaviour) was beneficial: personally I needed to do that to get from my initial very unoriginal 'same-as-last-time-but-smaller' thinking to what I saw as the key points or pivots in the design. The problem of course was that the more I thought the more complexities I could see and the more hopeless actually trying to write any code became - analysis-paralysis.

Initial design detail

For the details of my initial design see this page. I have included comments on the glaring flaws in the initial design in this description.

Design Improvements

I talked through many of the design improvements as I described the initial design details.

One further change I made was to decide to add the 'unmodifiable' collections described above. I still question how useful these really are but it was interesting to do it, and looking at the uml diagrams for the initial design I realised that I could provide an equivalent of the Java 'unmodifiable' wrappers fairly easily. I created a type IMiniBasicCollection with the same 'non-destructive' operations as IMiniCollection and made IMiniCollection a subtype of this, ie adding the destructive behaviour. I had already move the removeCurrent() operation out of the IMiniIterator type, so IMiniBasicCollection could be iterable, and neither the type nor the iterator provides any operations to alter the membership of the collection. I preferred to call the concrete class 'fixed membership' rather than unmodifiable. This composes an IMiniCollection so that the fixed membership view reflects any changes in the backing collection.

I also made IMiniBasicCollection implement java.lang.Iterable as well as my own IMiniIterable. This was simply to be able to use the for-each construct with my collections. Clearly, it is only a matter of time before Java embrace MiniCollections and provide a for-each that uses IMiniIterator, but until then I put in this bodge. It was very interesting working out how to then make the for-each deliver the same collection elements as my own iterators. Essentially I adapted my own iterators (themselves adapted from Iterators) back to the Iterator interface (for-each expects an iterator that returns an element with each call to next() and stops when hasNext() == false; IMiniIterators do not behave like this). There is a better explanation of why this was necessary in the final design details for bags of singles and bags of pairs.

The final design also includes the abstract factories which were implicit in the intentions of initial design but not actually in the diagrams.

Final Design

For the details of my final design see this page.