User:Scott Parlane/DesignStudy

m |

m (Reverted edits by Ebybymic (Talk); changed back to last version by Scott Parlane) |

||

| (15 intermediate revisions by 2 users not shown) | |||

| Line 1: | Line 1: | ||

= Project (Programming Language Parser) = | = Project (Programming Language Parser) = | ||

| + | |||

== Description == | == Description == | ||

| − | My project is a parser for LTF (Logic, Types, Flow) | + | My project is a parser for LTF (Logic, Types, Flow). |

I have a previous parser (written in C++) that can parse a language with similar logic/type declarations. However this project is mostly new, some parsing, OO, and language concepts are shared from the previous project. | I have a previous parser (written in C++) that can parse a language with similar logic/type declarations. However this project is mostly new, some parsing, OO, and language concepts are shared from the previous project. | ||

| Line 20: | Line 21: | ||

== Design == | == Design == | ||

| + | |||

| + | [[Image:Spa77-design-token-symbol.png]] | ||

| + | |||

| + | [[Image:Spa77-uml-program.png]] | ||

| + | |||

| + | |||

| + | (Notes about UML: The uml editor didn't understand std::map/vector implications of dependencies. The class set is incomplete, more classes exists, but showing them would destroy the ability to understand the idea of the design) | ||

| + | |||

Root types: | Root types: | ||

| − | * | + | * Type (a class/type in LTF, links to AST for each function) |

| − | * Program (a program, described by a flow) | + | * Program (a program, described by a flow, links to Type(s) used) |

* AST (some item in the AST, an option of some sort) | * AST (some item in the AST, an option of some sort) | ||

| − | * | + | * Symbol (a single entity in the syntax of LTF) [first diagram] |

| + | * Tokenizer (the class for cutting input files into Symbols, one at a time) [first diagram] | ||

| − | + | Important types inherieting from AST: | |

| − | * CodeAST | + | * VariableAST (provides access to a variable) |

| − | * | + | * FunctionAST (implements a function, and contains the AST for the function) |

| + | * ValueAST (a constant in the AST) [not shown] | ||

| + | * CodeAST (performs some kind of action in the AST) | ||

| + | |||

| + | Examples of types inherieting from CodeAST: | ||

| + | * NewCodeAST (creates a new instance of a type) | ||

| + | * FunctionCallAST (calls a function) [not shown] | ||

| + | |||

| + | == Implementation Notes == | ||

| + | * The system is broken up into 2 stages. First, the code is parsed into an AST. Then the AST is converted into real code. (The real code will either be C or llvm, leaving the optimization and later stages of compiling up to the C compiler, or llvm) | ||

| + | * There are 3 separate parsers. This is because there are 3 separate grammers to parse. Each of the parsers creates an instance of a Tokenizer and adds the Symbols that it supports to it. | ||

== Design Justification == | == Design Justification == | ||

| − | * Code parsing has an inherent requirement for a [[Switch statement smell]]. I have placed all of the switch statements in the parsers. This prevents the need for switch statements in classes, or any later part of the processing. | + | * Code parsing has an inherent requirement for a [[Switch statement smell]]. I have placed all of the switch statements in the parsers. This prevents the need for switch statements in classes, or any later part of the processing. Also, [[Single choice principle]] applies where, the single place of choice is the parser. This also helps to [[Eliminate case analysis]] later in the program. The Symbol class invokes this switch statement smell. |

| − | * Overriding the code generation in each submodule, and having a single function definition that generates the code helps to [[Avoid downcasting]]. | + | * Overriding the code generation in each submodule, and having a single function definition that generates the code helps to [[Avoid downcasting]]. This also helps to [[Program to the interface not the implementation]]. Also, this allows the code generator to call itself on every object it knows about (generally to implement a block of code) as in [[Recursion introduction]] |

| − | * In the parsing the code [[Avoid side effects]] gives the global module a nextToken() and currToken() function, because most of the time the current token is required again, and storing it as a local variable would not work. | + | * In the parsing the code [[Avoid side effects]] gives the global module a nextToken() and currToken() function, because most of the time the current token is required again, and storing it as a local variable would not work. Having nextToken() return anything does violate [[Keep accessors and mutators separate]] however the benefit of not requiring the call to nextToken() then currToken() is worth this. |

| − | * [[Big design up front]] is used to for this project, because the language as a whole needs to be designed before a parser can be started. | + | * [[Big design up front]] is used to for this project, because the language as a whole needs to be designed before a parser can be started. This is only the overall design however, the parser, AST storage, and code generation as designed as required throughout the project. |

* [[Don't expose mutable attributes]] is applied, all classes provide getter and setter methods as required to access their internals. Further, [[Getters and setters]] rule for good design, version 2 is used. However in some places, especially with regards to iterators for maps. | * [[Don't expose mutable attributes]] is applied, all classes provide getter and setter methods as required to access their internals. Further, [[Getters and setters]] rule for good design, version 2 is used. However in some places, especially with regards to iterators for maps. | ||

* [[Encapsulate that which varies]] is used to make all variables accesses appear the same, even though they might be via an array, or directly accessed. The same is also true for the code itself, built-in operators have the same interface as user-defined functions. Making them easy to use interchangeably. | * [[Encapsulate that which varies]] is used to make all variables accesses appear the same, even though they might be via an array, or directly accessed. The same is also true for the code itself, built-in operators have the same interface as user-defined functions. Making them easy to use interchangeably. | ||

| + | * The implementation doesn't think [[Goto considered harmful]] it just happens that goto has not yet been required. However, if goto is introduced, it will be introduced as part of a failure path. | ||

| + | * The entire design employs [[Information hiding]] so that all of the code is can be represented by a single base class, and each different type of code generates its own output, without the code generator needing to know what it does. This also helps to implement [[Tell dont ask]]. | ||

| + | * [[Keep it simple]] is applied, each entity in the syntax tree of LTF is a separate function in the parser. This makes it easy to follow the parsing path, and also to know what functions will/should be called next. The conflict with [[Big design up front]] is not an issue in this situation as the conflict is with a part of BDUF that is not being done. | ||

| + | * In the parser, the [[Law of Demeter]] is violated in many places by using the result of currToken() as an object. This is not a concern because the usage is more like the third example of code, also the class that has currToken() is actually a [[God class]] that has a [[God class]] as a parent. | ||

| + | * [[Named constants]] are used to identify tokens during parsing. (However, the actual value of the constants doesn't matter, as they are from an enum) | ||

| + | * [[Avoid concrete base classes]] and [[Stable abstractions principle]] are violated to allow for catching un-implemented functions at run time instead of compile time. All methods that need it are virtual, and are overridden with real code as implemented. Doing this means that a class can be created and tested in parts. | ||

| + | * [[Once and only once]] is violated because of the 3 separate parsers. This is hard to avoid because each parser deals with a different syntax. Mostly this occurs in the flow and logic parsers, because flow is a cut down version of logic, in the future it might be possible to implement the flow parser as a feature-subset of the logic parser, and thus use #ifdef marcos to switch between parsers. | ||

| + | * [[One responsibility rule]] and Tokenizer do not agree. Spliting Tokenizer up would make more of a mess than the current implementation, and would make some highly coupled classes. | ||

| + | * [[Separation of concerns]] is used, the Tokenizer, Parser, and Code generation are distinct. (The last 2 are even distinct steps). Also, most methods perform a single action. | ||

| + | * [[Software reuse]] happens at the code generation step, the output will either be C code, llvm or both (yet to be decided). This means the entire process of optimizing, generating assembler and turning it into a binary is reusing existing well-written software. | ||

| + | * [[You ain't gonna need it]] is not violated, even though it conflicts with [[Big design up front]] because the overall design is designed up front, and methods are added as required to implement that design. | ||

| + | * Currently, [[No Peter Pan objects]] is violated because the tokens are never deconstructed. This is intentional, as parts of them are needed elsewhere. In the future they will be tied into the AST. | ||

| + | * [[Reduce the number of arguments]] is applied to the parser, the Tokenizer class is passed around in the parsers inside of the file, token factory, and current token. | ||

| + | * [[Reduce the size of methods]] would not get along well with the parser in this program. But they are already split into the smallest size without getting confusing or having long parameter lists. | ||

| + | * The Tokenizer is a [[Builder]] of Symbols. | ||

| + | * Some nodes in the AST exhibit the [[Composite]] pattern by containing other AST objects. | ||

| + | * The [[Interpreter]] pattern is not used, at least not as it is documented here. Putting the parsing of the language into the AST classes would violate [[Keep it simple]] and [[Separation of concerns]] | ||

| + | * The [[Iterator]] pattern applies, espically to looking up existing names, and generating code output in blocks. However the iterators are actually internal to the classes, but they help with [[Keep it simple]] | ||

| + | |||

| + | == Constraints of implementation == | ||

| + | Only some of the parsing stage is implemented. It should be sufficient to show that the design as it stands works. Mostly the Type parser is operational, and the flow parser is also working. However the logic parser has not been attempted. | ||

| + | [[Media:Ltf.tar.bz2]] | ||

Latest revision as of 03:22, 25 November 2010

Contents |

Project (Programming Language Parser)

Description

My project is a parser for LTF (Logic, Types, Flow). I have a previous parser (written in C++) that can parse a language with similar logic/type declarations. However this project is mostly new, some parsing, OO, and language concepts are shared from the previous project.

Goals/Requirements

- Parse and create an AST (Abstract Syntax Tree) of the LTF.

About LTF

LTF is designed for writing code similar to the way the shell works, except with much more flexibility, as data can be pushed to any of the objects, not just in a forwards directions. Code is written in 3 seperate parts:

- Type information is the meta data describing each of the types in the system. (Like a header file)

- Logic is the code that implements the functionality of the types. (like normal code files)

- Flow creates objects and connects them together (similar to a main function)

Things to note:

- LTF supports single inherientance.

- LTF supports interfaces, on a per function basis.

- LTF is a push system, data always moves from in to out.

- input functions do not return a value, the correct solution is to input an error to the caller.

- Logic is pre-order, and bracketing is non-optional.

Design

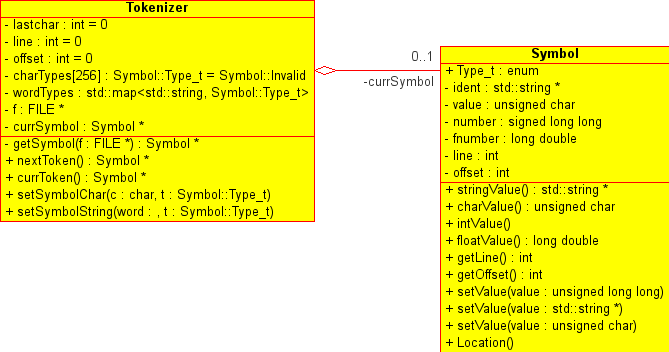

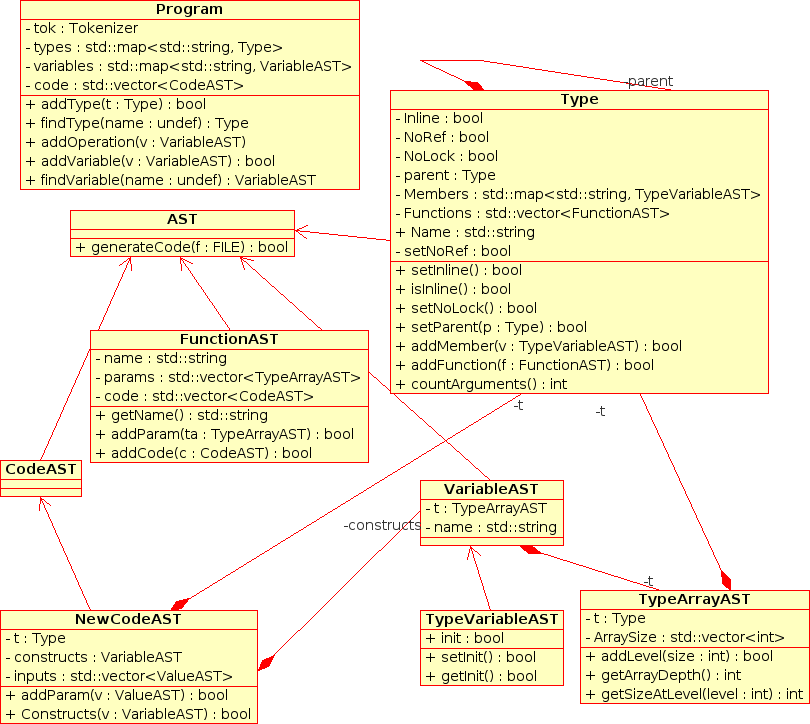

(Notes about UML: The uml editor didn't understand std::map/vector implications of dependencies. The class set is incomplete, more classes exists, but showing them would destroy the ability to understand the idea of the design)

Root types:

- Type (a class/type in LTF, links to AST for each function)

- Program (a program, described by a flow, links to Type(s) used)

- AST (some item in the AST, an option of some sort)

- Symbol (a single entity in the syntax of LTF) [first diagram]

- Tokenizer (the class for cutting input files into Symbols, one at a time) [first diagram]

Important types inherieting from AST:

- VariableAST (provides access to a variable)

- FunctionAST (implements a function, and contains the AST for the function)

- ValueAST (a constant in the AST) [not shown]

- CodeAST (performs some kind of action in the AST)

Examples of types inherieting from CodeAST:

- NewCodeAST (creates a new instance of a type)

- FunctionCallAST (calls a function) [not shown]

Implementation Notes

- The system is broken up into 2 stages. First, the code is parsed into an AST. Then the AST is converted into real code. (The real code will either be C or llvm, leaving the optimization and later stages of compiling up to the C compiler, or llvm)

- There are 3 separate parsers. This is because there are 3 separate grammers to parse. Each of the parsers creates an instance of a Tokenizer and adds the Symbols that it supports to it.

Design Justification

- Code parsing has an inherent requirement for a Switch statement smell. I have placed all of the switch statements in the parsers. This prevents the need for switch statements in classes, or any later part of the processing. Also, Single choice principle applies where, the single place of choice is the parser. This also helps to Eliminate case analysis later in the program. The Symbol class invokes this switch statement smell.

- Overriding the code generation in each submodule, and having a single function definition that generates the code helps to Avoid downcasting. This also helps to Program to the interface not the implementation. Also, this allows the code generator to call itself on every object it knows about (generally to implement a block of code) as in Recursion introduction

- In the parsing the code Avoid side effects gives the global module a nextToken() and currToken() function, because most of the time the current token is required again, and storing it as a local variable would not work. Having nextToken() return anything does violate Keep accessors and mutators separate however the benefit of not requiring the call to nextToken() then currToken() is worth this.

- Big design up front is used to for this project, because the language as a whole needs to be designed before a parser can be started. This is only the overall design however, the parser, AST storage, and code generation as designed as required throughout the project.

- Don't expose mutable attributes is applied, all classes provide getter and setter methods as required to access their internals. Further, Getters and setters rule for good design, version 2 is used. However in some places, especially with regards to iterators for maps.

- Encapsulate that which varies is used to make all variables accesses appear the same, even though they might be via an array, or directly accessed. The same is also true for the code itself, built-in operators have the same interface as user-defined functions. Making them easy to use interchangeably.

- The implementation doesn't think Goto considered harmful it just happens that goto has not yet been required. However, if goto is introduced, it will be introduced as part of a failure path.

- The entire design employs Information hiding so that all of the code is can be represented by a single base class, and each different type of code generates its own output, without the code generator needing to know what it does. This also helps to implement Tell dont ask.

- Keep it simple is applied, each entity in the syntax tree of LTF is a separate function in the parser. This makes it easy to follow the parsing path, and also to know what functions will/should be called next. The conflict with Big design up front is not an issue in this situation as the conflict is with a part of BDUF that is not being done.

- In the parser, the Law of Demeter is violated in many places by using the result of currToken() as an object. This is not a concern because the usage is more like the third example of code, also the class that has currToken() is actually a God class that has a God class as a parent.

- Named constants are used to identify tokens during parsing. (However, the actual value of the constants doesn't matter, as they are from an enum)

- Avoid concrete base classes and Stable abstractions principle are violated to allow for catching un-implemented functions at run time instead of compile time. All methods that need it are virtual, and are overridden with real code as implemented. Doing this means that a class can be created and tested in parts.

- Once and only once is violated because of the 3 separate parsers. This is hard to avoid because each parser deals with a different syntax. Mostly this occurs in the flow and logic parsers, because flow is a cut down version of logic, in the future it might be possible to implement the flow parser as a feature-subset of the logic parser, and thus use #ifdef marcos to switch between parsers.

- One responsibility rule and Tokenizer do not agree. Spliting Tokenizer up would make more of a mess than the current implementation, and would make some highly coupled classes.

- Separation of concerns is used, the Tokenizer, Parser, and Code generation are distinct. (The last 2 are even distinct steps). Also, most methods perform a single action.

- Software reuse happens at the code generation step, the output will either be C code, llvm or both (yet to be decided). This means the entire process of optimizing, generating assembler and turning it into a binary is reusing existing well-written software.

- You ain't gonna need it is not violated, even though it conflicts with Big design up front because the overall design is designed up front, and methods are added as required to implement that design.

- Currently, No Peter Pan objects is violated because the tokens are never deconstructed. This is intentional, as parts of them are needed elsewhere. In the future they will be tied into the AST.

- Reduce the number of arguments is applied to the parser, the Tokenizer class is passed around in the parsers inside of the file, token factory, and current token.

- Reduce the size of methods would not get along well with the parser in this program. But they are already split into the smallest size without getting confusing or having long parameter lists.

- The Tokenizer is a Builder of Symbols.

- Some nodes in the AST exhibit the Composite pattern by containing other AST objects.

- The Interpreter pattern is not used, at least not as it is documented here. Putting the parsing of the language into the AST classes would violate Keep it simple and Separation of concerns

- The Iterator pattern applies, espically to looking up existing names, and generating code output in blocks. However the iterators are actually internal to the classes, but they help with Keep it simple

Constraints of implementation

Only some of the parsing stage is implemented. It should be sufficient to show that the design as it stands works. Mostly the Type parser is operational, and the flow parser is also working. However the logic parser has not been attempted. Media:Ltf.tar.bz2