LukasKorsikaDesignStudy

m (Reverted edits by Ebybymic (Talk); changed back to last version by Lukas Korsika) |

|||

| (14 intermediate revisions by 2 users not shown) | |||

| Line 9: | Line 9: | ||

The input to the program is a list of files to be compared for duplication, and the output is a set of groups of identical files, with each group separated by a new line. Originally I wanted to pass in a list of paths, but this method allows me much more flexibility. Given that this program is intended to be used primarily under Linux, I can use a tool like ''find''(1) to generate the list of files I am interested in. This gives me the power to limit my comparisons to, for example, files with the .avi extension. Adding support for such advanced operation to this program would duplicate existing functionality, and violate the unix philosophy of Do One Thing, And Do It Well. (in OO terms, the [[Single responsibility principle]]). | The input to the program is a list of files to be compared for duplication, and the output is a set of groups of identical files, with each group separated by a new line. Originally I wanted to pass in a list of paths, but this method allows me much more flexibility. Given that this program is intended to be used primarily under Linux, I can use a tool like ''find''(1) to generate the list of files I am interested in. This gives me the power to limit my comparisons to, for example, files with the .avi extension. Adding support for such advanced operation to this program would duplicate existing functionality, and violate the unix philosophy of Do One Thing, And Do It Well. (in OO terms, the [[Single responsibility principle]]). | ||

| − | It is based on an existing program I wrote in C many years ago (well, about three). The goal of this exercise is to | + | It is based on an existing program I wrote in C many years ago (well, about three). The goal of this exercise is to improve the design to a level at which I am happy with it, and then implement it in C# (running under Mono). |

| + | |||

| + | For interest's sake, the original was around 1200 lines of dense C code. I really needed to improve the design, and this seemed like a good opportunity. | ||

== Requirements == | == Requirements == | ||

| Line 23: | Line 25: | ||

== Initial Design == | == Initial Design == | ||

''(converted to Object Oriented Programming from the original C source, so some liberties have been taken with converting this into classes, but this is essentially its original form)'' | ''(converted to Object Oriented Programming from the original C source, so some liberties have been taken with converting this into classes, but this is essentially its original form)'' | ||

| − | '' | + | |

| + | ''Also note that this diagram does not contain all the methods, functions, and relations. Only the ones I wanted to mention in the design criticisms'' | ||

[[image:Lko15-OldUML.png]] | [[image:Lko15-OldUML.png]] | ||

| Line 32: | Line 35: | ||

The helper classes are: | The helper classes are: | ||

* File -- This represents a file on the file system, and has methods to find its size, and its SHA-1 hash. (which are the only supported bits of data used to check for uniqueness). | * File -- This represents a file on the file system, and has methods to find its size, and its SHA-1 hash. (which are the only supported bits of data used to check for uniqueness). | ||

| − | * Tree -- This is a simple class representing a Tree. A tree is composed of a set of TreeNode, and stores a reference to the root. It also has a prune method, which uses a recursive algorithm to remove all TreeNodes with one or fewer (<= 1) files in it. | + | * Tree -- This is a simple class representing a Tree. This is basically a binary tree used to store the set of files corresponding to each value (each filesize, etc). A tree is composed of a set of TreeNode, and stores a reference to the root. It also has a prune method, which uses a recursive algorithm to remove all TreeNodes with one or fewer (<= 1) files in it. |

* TreeNode -- A tree node represents a node in a binary tree, stores its key (which may be size or hash depending on the tree), and a list of all files which have that value. TreeNode has a number of recursive methods to iterate over the tree, get the list of files at that node, and insert a new file with a key recursively. | * TreeNode -- A tree node represents a node in a binary tree, stores its key (which may be size or hash depending on the tree), and a list of all files which have that value. TreeNode has a number of recursive methods to iterate over the tree, get the list of files at that node, and insert a new file with a key recursively. | ||

| Line 39: | Line 42: | ||

Grouping is performed by calculating the relevant statistic for each file (in this version either size or hash). This is used as a key for the file when it is inserted into the tree. Note that our Tree class supports having multiple values for a single key. Then it calls the tree's prune() method, which removes all nodes with only one value. In real terms, this represents getting rid of all files with a unique size/hash. | Grouping is performed by calculating the relevant statistic for each file (in this version either size or hash). This is used as a key for the file when it is inserted into the tree. Note that our Tree class supports having multiple values for a single key. Then it calls the tree's prune() method, which removes all nodes with only one value. In real terms, this represents getting rid of all files with a unique size/hash. | ||

| − | This process is first performed for size (as it is | + | This process is first performed for size (as it is quickly obtainable). Each node now contains a set of files with the same size, which may be identical. To discover whether they are (most likely) identical we repeat the process using the hash of each file in a node. We do this on a node-by-node basis as files with different sizes are never equal. Any nodes that are left in the tree of hashes has a list of files with the same size and the same hash. These are most likely identical, and are output as a group to the standard output. |

| + | |||

| + | Note that while this design looks much simpler in UML it suffers heavily from the [[Long method smell]], and is full of violations of the [[Single responsibility principle]]. Everything on the new design is handled somewhere in the current design. | ||

=== Criticisms === | === Criticisms === | ||

''Along with the solutions'' | ''Along with the solutions'' | ||

| + | |||

| + | There are the most obvious criticisms of the design which jumped out at me. Originally I planned to iteratively improve the design, but after fixing these problems most of the other design issues went away, so I just tweaked the resulting design a little and posted it as the new version below. | ||

* Uses a [[God class]] -- many sub-issues to do with this. | * Uses a [[God class]] -- many sub-issues to do with this. | ||

| Line 48: | Line 55: | ||

* TreeNode deals with both maintaining collections of files, as well as implementing a binary tree. This violates the [[Single responsibility principle]]. | * TreeNode deals with both maintaining collections of files, as well as implementing a binary tree. This violates the [[Single responsibility principle]]. | ||

** => Remove TreeNode, and instead make a Tree class that uses a MultiMap (which we probably have to implement. *sigh*, C#) to store the files. | ** => Remove TreeNode, and instead make a Tree class that uses a MultiMap (which we probably have to implement. *sigh*, C#) to store the files. | ||

| − | * The prune method should perhaps be in TreeNode, to [[Keep related data and behavior in one place]]. | + | * The prune method (which removes nodes with only one file from the tree) should perhaps be in TreeNode rather than Tree, to [[Keep related data and behavior in one place]]. |

** => This may have to stay this way, as rolling our own MultiMap with pruning support violates [[Single responsibility principle]], and extending a MultiMap implementation would be [[Inheritance for implementation]]. Besides, given that a Tree is ComposedOf its MultiMap, this doesn't seem too bad. | ** => This may have to stay this way, as rolling our own MultiMap with pruning support violates [[Single responsibility principle]], and extending a MultiMap implementation would be [[Inheritance for implementation]]. Besides, given that a Tree is ComposedOf its MultiMap, this doesn't seem too bad. | ||

| − | * The [[God class]] shouldn't have to ask the File for its size/hash. It should instead tell the | + | * The [[God class]] shouldn't have to ask the File for its size/hash. It should instead tell the collection to insert it using whatever hashing method that collection uses [[Tell, don't ask]]. |

| − | ** => What files are being sorted by really depends on the | + | ** => What files are being sorted by really depends on the collection, so perhaps a collection should know this information. This way one can simply .Insert(File) into the tree. This could use a Classifier interface, which would be implemented by various concrete classifiers such as SizeClassifier, HashClassifier, etc, and used by the Tree to resolve a file into a sorting key. |

| + | ** In the end I decided to create a Grouper that takes one file group and returns a list of file groups for each classification value. ''(see below)'' | ||

* The tree should know what it's grouping by rather than that information being implied by the variable storing the tree (eg Tree sizeTree) [[Keep related data and behavior in one place]]. | * The tree should know what it's grouping by rather than that information being implied by the variable storing the tree (eg Tree sizeTree) [[Keep related data and behavior in one place]]. | ||

** ''(See above)'' | ** ''(See above)'' | ||

* The File class shouldn't calculate hashes [[Single responsibility principle]] | * The File class shouldn't calculate hashes [[Single responsibility principle]] | ||

| − | ** => Create a | + | ** => Create a HashAlgorithm class, which can be instantiated by the HashClassifier. Neither HashAlgorithm nor File knows about the existence of the other. As they are somewhat unrelated this keeps [[Coupling_and_cohesion#Coupling|coupling]] low, making it much easier to [[Software_reuse|reuse]] these classes. |

| − | * It should be possible to add new key types to group by without modifying existing classes. [[Open closed principle]] [[ | + | * It should be possible to add new key types to group by without modifying existing classes. This is in keeping with the [[Open closed principle]], and hints strongly at [[Beware type switches]], as that is essentially what hard-coded key types are. |

| − | ** | + | ** => The Classifier approach suggested above nicely deals with that problem as creating a new key type is as simple as creating a new concrete Classifier. |

== New Version == | == New Version == | ||

| − | ''The | + | ''The improved version of the design, taking into account the above criticisms and suggestions.'' |

| + | |||

| + | ''The implementation for this design can be found (along with a precompiled Windows executable) at https://qu4z.net/dupe.zip (Ignore the warning about a self-signed cert). It hasn't been thoroughly tested, but it works as a proof of concept.'' | ||

[[image:Lko15-R1UML.png]] | [[image:Lko15-R1UML.png]] | ||

| Line 66: | Line 76: | ||

=== Design Description === | === Design Description === | ||

| − | This new design has moved most of the functionality out of the [[God class]]. | + | This new design has moved most of the functionality out of the [[God class]]. Let's give a brief tour of the classes and their responsibilities. |

| − | * | + | |

| − | * | + | * Starting on the right-hand side we have the MultiMap interface, and its implementation, MapSetMultiMap. A MultiMap is a collection which associates a key with multiple values. That is, it is like a map, but rather than storing a single value for the key, it stores a list/set. This seemed like a useful reusable data structure to replace the horrible binary tree/linked list mutant that was the Tree/TreeNode pair. Unfortunately, C# does not have this collection built into the standard library, so I rolled my own simple one using a Map and a Set. This class has no knowledge of any other classes in the design, making it easily [[Software_reuse|reusable]]. Also, all variables of this type reference the [[Program_to_the_interface_not_the_implementation|interface, not the implementation]] (aside from the calls to new). This means the collection could easily be swapped out for another which implements the interface. |

| − | + | * Slightly further down we have the HashAlgorithm interface. This interface is also stand-alone (in that it references no other classes on the diagram). It has two implementations in this project, the MD5Algorithm and the SHA1Algorithm, but more could easily be added. In this way it is [[Open closed principle|open for extension, but closed for modification]]. C# already has a native HashAlgorith type which I've inadvertently duplicated, but at the time of design/implementation I didn't know this. Luckily my design is flexible, so a simple modification of the HashGrouper would allow us to use the native support instead (which is already used internally by my concrete hash algorithms). From this I learned that you should check for existing support before you code anything, and learning is always good. (I sorta knew already. But sometimes you forget). | |

| − | * | + | * Now, on the left we have the File class. This class represents a file on the file-system. This stores a path, and provides a simple interface to the information we need for this program. It allows us to get a data stream or the file size without needing to mess about with file permissions and FileInfo objects. So in a sense it [[Keep_related_data_and_behavior_in_one_place|keeps related data and behaviour in one place]]. It also overrides GetHashCode and Equals so that files with the same path are treated as the same. Like the other classes mentioned above, this class does not depend on any other classes in this design. |

| − | * Grouper is an abstract class which | + | * A FileGroup represents a set of files grouped by some characteristic. It stores the list of files, and a textual description of the characteristic it is grouped by. In the current version of the program, this means they store a string of the format "Size: xKB" or "Size: xKB, Hash: ab2234989797b...". This has a slight [[Data class smell]], but it does know how to output itself. Also it is [[Model the real world|modelling the real world]] in the sense that a group of files with a description is part of the domain (ie it's the format of the output), so I think this is acceptable. |

| − | * | + | * FileGroupList is a class which I was at first hesitant about creating, but it's the logical place for some of this functionality. A file list represents a set of FileGroups which between them represent all the files we suspect may not be unique. That is, we initially set up a FileGroupList with all the files passed in on the standard input. Once we can prove certain files to be unique they are removed from the FileGroupList, and any others are added to the relevant FileGroup for their attributes so far. Essentially, FileGroupList has the responsibility for tracking the "world" at any point, as well as grouping the contents of each FileGroup it contains by a grouper passed into the GroupBy method. It's also responsible for outputting the complete list. |

| + | * Grouper is an abstract class which subdivides a set of files by some attribute of that file. It is something of a [[Avoid verb classes|verb class]], but there is nowhere else sensible to put this functionality. In a sense a Grouper is actually a [[Strategy|GroupingStrategy]], but I like the shorter name. It still accurately conveys the intent of the class. The ''doGrouping'' method is actually a [[Template Method]], relying on the abstract methods ''classify'' and ''describe'' to provide the details of the grouping algorithm. | ||

| + | * SizeGrouper groups files by their size. | ||

| + | * HashGrouper groups files by some hash algorithm, which is passed in to the constructor, making HashGrouper [[Open closed principle|open for extension, but closed for modification]]. | ||

| + | * The Program class is no longer a God class, as it has relatively minimal responsibilities. It reads a file list from the input, and converts it into a FileGroupList with a single group. It then calls FileGroupList::GroupBy for each Grouper, and finally [[Tell, don't ask|tells]] the FileGroupList to output itself. | ||

| + | |||

| + | === Design Patterns === | ||

| + | |||

| + | * The [[Iterator]] pattern is used somewhat implicitly as all collections are returned as IEnumerable, which is essentially an Iterable interface. No custom iterators are created in this project, which is mostly because anything iterable is passed around in a built in collection. | ||

| + | * HashAlgorithm is essentially a [[Strategy]] for the HashGrouper, with the ''doHash'' method being the AlgorithmInterface, MD5Algorithm and SHA1Algorithm being the ConcreteStrategies, and HashGrouper being the Context. Luckily, HashAlgorithm doesn't need to access HashGrouper's internal state, which simplifies the design over some Strategies, but it's a Strategy none-the-less. | ||

| + | * Grouper is almost but not quite a [[Command]]. This is because a Grouper doesn't contain the receiver, and thus doesn't completely encapsulate the concept of an action. However, it is still a representation of an action. | ||

=== Tradeoffs === | === Tradeoffs === | ||

| + | ''And reasons why things haven't been done certain ways'' | ||

| + | |||

| + | ... ''And design maxims I disagree with'' | ||

| + | |||

| + | * Grouper doesn't [[Avoid verb classes]]. While this class could perhaps be named better, but the task it performs is important, and cannot easily be merged into any other classes, especially as Grouper is essentially an interface with multiple implementations. (technically an abstract class with multiple instantiable subclasses, but it's effectively the same). | ||

| + | * Groupers could have used a [[Strategy pattern]] instead of being a [[Template Method]], but it wouldn't make any sense, because it never needs to change on the fly, and would just have complicated the design. The HashGrouper essentially uses a [[Strategy pattern]], but that is because the HashAlgorithms already existed. Also, implementing them in subclasses of the HashGrouper would violate the [[Single responsibility principle]]. | ||

| + | * I could have made FileGroupList contain the doGrouping method, and have, for instance, SizeFileGroupList. This would have [[Avoid verb classes|avoided the verb class]] mentioned above. However, having the grouping strategy in a separate class is better [[Separation of concerns]], and it saves having to instantiate a new FileGroupList for every type of operation. | ||

| + | * The ''doGrouping'' method doesn't [[Command query separation|separate commands and queries]], but I feel it is justified as it is essentially a "Compute The Result" command, and currently I [[You ain't gonna need it|ain't gonna need]] the ability to get the result repeatedly. | ||

| + | * I haven't followed the [[Law of Demeter]] in my design, because it conflicts with my desire to avoid [[Fat interfaces]] as much as possible. | ||

| + | * All of my local state is protected, in order to make classes [[Open closed principle|open for extension, but closed for modification]]. This conflicts with Riel's [[Avoid protected data]] maxim. However, I believe [[Object Encapsulation]] is better than [[Class Encapsulation]], so using protected data is the Right Thing(tm). (At least, I find it [[Model the real world|models the real world]] better). | ||

| + | * I could have made the design make better use of [[Tell, don't ask]] by, for instance, telling Files to do more things rather than doing stuff with them in other classes, but in the interest of [[Separation of concerns]] and [[Software reuse|reusability]] I kept a [[Single responsibility principle|a single responsibility]] for each of my classes. | ||

| + | * I could have created interfaces for everything, so that I could [[Program to the interface not the implementation]], and follow the [[Dependency inversion principle]], but I [[You ain't gonna need it|ain't gonna need it]] for this design, and the design is flexible enough that adding interfaces later on shouldn't be very hard. This is made possible because my design tries to be as [[Coupling_and_cohesion#Coupling|loosely coupled]] as possible. | ||

| + | * It is a little unfortunate that the Grouper knows about (and depends on) FileGroups and Files, but other solutions using, perhaps, an [[Abstract Factory]] would be much more [[Do the simplest thing that could possibly work|complicated]]. | ||

| + | |||

| + | === Other Notes === | ||

| + | |||

| + | * All my inheritance relations follow the [[Liskov substitution principle]]. | ||

| + | * I tried to [[Model the real world]] in my design as much as possible, leading to a class for every concept a user would have. | ||

| + | * I tried to encapsulate as much as possible without overcomplicating the design. The details of file IO and file info retrieval are encapsulated within the File class. The details of hash algorithms are encapsulated in their respective classes. The collections used are split off into reusable generic classes. etc | ||

| + | * I followed the [[Bob_Martin's_principles#Principles_of_class_design|SOLID principles]] as much as possible, although some didn't factor into the design as there was no real opportunity to break them. | ||

Latest revision as of 03:08, 25 November 2010

Contents |

The Problem

The project I am designing in this study is an application to help me manage my files. I tend to have a number of copies of the same file scattered throughout my various computers and hard drives. I'm sure some of you also have this problem. The reasons for why this occurs include:

- Some partitions/disks are only accessible under Linux, so I copy files over to Windows-accessible partitions.

- I often copy videos to my laptop to watch away from my desk.

- As above, it's useful to have music on both my laptop and my desktop.

While there are clever solutions to this at the filesystem level and the like, these are far too complicated and unstable for me to use on an everyday basis. This program performs a much simpler task: It will go through my filesystem, and tell me which files are the same. I can then use this information to decide how to deal with the situation.

The input to the program is a list of files to be compared for duplication, and the output is a set of groups of identical files, with each group separated by a new line. Originally I wanted to pass in a list of paths, but this method allows me much more flexibility. Given that this program is intended to be used primarily under Linux, I can use a tool like find(1) to generate the list of files I am interested in. This gives me the power to limit my comparisons to, for example, files with the .avi extension. Adding support for such advanced operation to this program would duplicate existing functionality, and violate the unix philosophy of Do One Thing, And Do It Well. (in OO terms, the Single responsibility principle).

It is based on an existing program I wrote in C many years ago (well, about three). The goal of this exercise is to improve the design to a level at which I am happy with it, and then implement it in C# (running under Mono).

For interest's sake, the original was around 1200 lines of dense C code. I really needed to improve the design, and this seemed like a good opportunity.

Requirements

A basic description of requirements the solution must fulfill.

- Must take a list of files as input, and output groups of identical files

- Must support a variety of approaches for determining equality -- for instance, file size, hashes, modification time, and the raw file contents.

- Must use reasonable amounts of memory, I/O bandwidth, and time.

- Should be file-system agnostic (that is, it shouldn't be limited to one particular file system)

- Should be extensible, to ease implementation of possible future features. (the original does not fulfill this requirement).

Initial Design

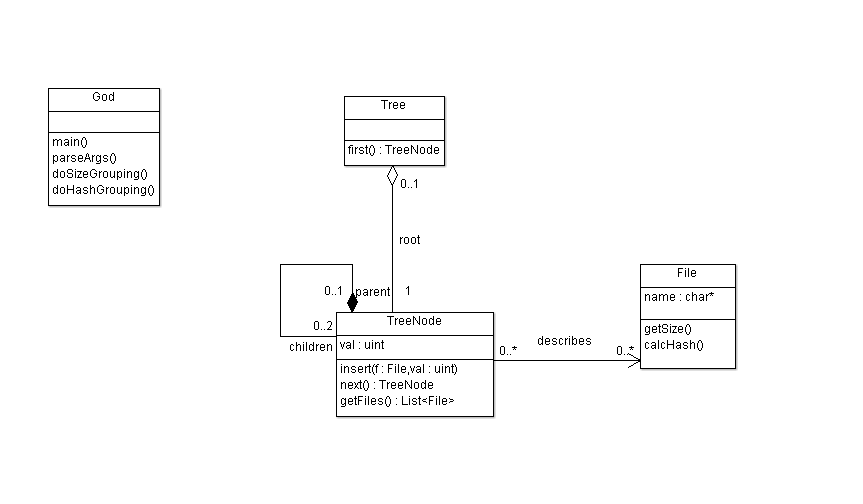

(converted to Object Oriented Programming from the original C source, so some liberties have been taken with converting this into classes, but this is essentially its original form)

Also note that this diagram does not contain all the methods, functions, and relations. Only the ones I wanted to mention in the design criticisms

Design Description

As this program was originally written in C most of the code is in a variety of functions which don't really belong to any class. That has been represented by the obvious God Class in this diagram. There are a few classes which this main program uses to perform its task. The helper classes are:

- File -- This represents a file on the file system, and has methods to find its size, and its SHA-1 hash. (which are the only supported bits of data used to check for uniqueness).

- Tree -- This is a simple class representing a Tree. This is basically a binary tree used to store the set of files corresponding to each value (each filesize, etc). A tree is composed of a set of TreeNode, and stores a reference to the root. It also has a prune method, which uses a recursive algorithm to remove all TreeNodes with one or fewer (<= 1) files in it.

- TreeNode -- A tree node represents a node in a binary tree, stores its key (which may be size or hash depending on the tree), and a list of all files which have that value. TreeNode has a number of recursive methods to iterate over the tree, get the list of files at that node, and insert a new file with a key recursively.

Most of the functionality occurs in global functions outside of classes. These are shown in the God class in the diagram. This god class handles the parsing of arguments, the reading of the file list from stdin, building the groups of identical files, and outputting the result.

Grouping is performed by calculating the relevant statistic for each file (in this version either size or hash). This is used as a key for the file when it is inserted into the tree. Note that our Tree class supports having multiple values for a single key. Then it calls the tree's prune() method, which removes all nodes with only one value. In real terms, this represents getting rid of all files with a unique size/hash.

This process is first performed for size (as it is quickly obtainable). Each node now contains a set of files with the same size, which may be identical. To discover whether they are (most likely) identical we repeat the process using the hash of each file in a node. We do this on a node-by-node basis as files with different sizes are never equal. Any nodes that are left in the tree of hashes has a list of files with the same size and the same hash. These are most likely identical, and are output as a group to the standard output.

Note that while this design looks much simpler in UML it suffers heavily from the Long method smell, and is full of violations of the Single responsibility principle. Everything on the new design is handled somewhere in the current design.

Criticisms

Along with the solutions

There are the most obvious criticisms of the design which jumped out at me. Originally I planned to iteratively improve the design, but after fixing these problems most of the other design issues went away, so I just tweaked the resulting design a little and posted it as the new version below.

- Uses a God class -- many sub-issues to do with this.

- => Separate functionality into appropriate classes.

- TreeNode deals with both maintaining collections of files, as well as implementing a binary tree. This violates the Single responsibility principle.

- => Remove TreeNode, and instead make a Tree class that uses a MultiMap (which we probably have to implement. *sigh*, C#) to store the files.

- The prune method (which removes nodes with only one file from the tree) should perhaps be in TreeNode rather than Tree, to Keep related data and behavior in one place.

- => This may have to stay this way, as rolling our own MultiMap with pruning support violates Single responsibility principle, and extending a MultiMap implementation would be Inheritance for implementation. Besides, given that a Tree is ComposedOf its MultiMap, this doesn't seem too bad.

- The God class shouldn't have to ask the File for its size/hash. It should instead tell the collection to insert it using whatever hashing method that collection uses Tell, don't ask.

- => What files are being sorted by really depends on the collection, so perhaps a collection should know this information. This way one can simply .Insert(File) into the tree. This could use a Classifier interface, which would be implemented by various concrete classifiers such as SizeClassifier, HashClassifier, etc, and used by the Tree to resolve a file into a sorting key.

- In the end I decided to create a Grouper that takes one file group and returns a list of file groups for each classification value. (see below)

- The tree should know what it's grouping by rather than that information being implied by the variable storing the tree (eg Tree sizeTree) Keep related data and behavior in one place.

- (See above)

- The File class shouldn't calculate hashes Single responsibility principle

- It should be possible to add new key types to group by without modifying existing classes. This is in keeping with the Open closed principle, and hints strongly at Beware type switches, as that is essentially what hard-coded key types are.

- => The Classifier approach suggested above nicely deals with that problem as creating a new key type is as simple as creating a new concrete Classifier.

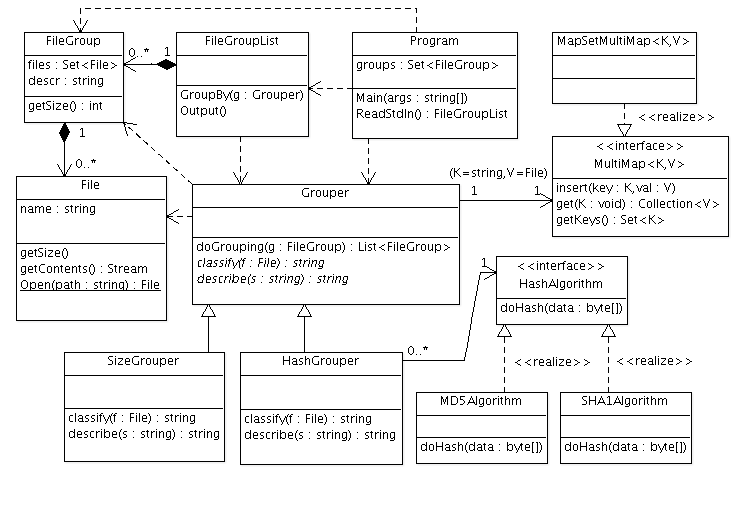

New Version

The improved version of the design, taking into account the above criticisms and suggestions.

The implementation for this design can be found (along with a precompiled Windows executable) at https://qu4z.net/dupe.zip (Ignore the warning about a self-signed cert). It hasn't been thoroughly tested, but it works as a proof of concept.

Design Description

This new design has moved most of the functionality out of the God class. Let's give a brief tour of the classes and their responsibilities.

- Starting on the right-hand side we have the MultiMap interface, and its implementation, MapSetMultiMap. A MultiMap is a collection which associates a key with multiple values. That is, it is like a map, but rather than storing a single value for the key, it stores a list/set. This seemed like a useful reusable data structure to replace the horrible binary tree/linked list mutant that was the Tree/TreeNode pair. Unfortunately, C# does not have this collection built into the standard library, so I rolled my own simple one using a Map and a Set. This class has no knowledge of any other classes in the design, making it easily reusable. Also, all variables of this type reference the interface, not the implementation (aside from the calls to new). This means the collection could easily be swapped out for another which implements the interface.

- Slightly further down we have the HashAlgorithm interface. This interface is also stand-alone (in that it references no other classes on the diagram). It has two implementations in this project, the MD5Algorithm and the SHA1Algorithm, but more could easily be added. In this way it is open for extension, but closed for modification. C# already has a native HashAlgorith type which I've inadvertently duplicated, but at the time of design/implementation I didn't know this. Luckily my design is flexible, so a simple modification of the HashGrouper would allow us to use the native support instead (which is already used internally by my concrete hash algorithms). From this I learned that you should check for existing support before you code anything, and learning is always good. (I sorta knew already. But sometimes you forget).

- Now, on the left we have the File class. This class represents a file on the file-system. This stores a path, and provides a simple interface to the information we need for this program. It allows us to get a data stream or the file size without needing to mess about with file permissions and FileInfo objects. So in a sense it keeps related data and behaviour in one place. It also overrides GetHashCode and Equals so that files with the same path are treated as the same. Like the other classes mentioned above, this class does not depend on any other classes in this design.

- A FileGroup represents a set of files grouped by some characteristic. It stores the list of files, and a textual description of the characteristic it is grouped by. In the current version of the program, this means they store a string of the format "Size: xKB" or "Size: xKB, Hash: ab2234989797b...". This has a slight Data class smell, but it does know how to output itself. Also it is modelling the real world in the sense that a group of files with a description is part of the domain (ie it's the format of the output), so I think this is acceptable.

- FileGroupList is a class which I was at first hesitant about creating, but it's the logical place for some of this functionality. A file list represents a set of FileGroups which between them represent all the files we suspect may not be unique. That is, we initially set up a FileGroupList with all the files passed in on the standard input. Once we can prove certain files to be unique they are removed from the FileGroupList, and any others are added to the relevant FileGroup for their attributes so far. Essentially, FileGroupList has the responsibility for tracking the "world" at any point, as well as grouping the contents of each FileGroup it contains by a grouper passed into the GroupBy method. It's also responsible for outputting the complete list.

- Grouper is an abstract class which subdivides a set of files by some attribute of that file. It is something of a verb class, but there is nowhere else sensible to put this functionality. In a sense a Grouper is actually a GroupingStrategy, but I like the shorter name. It still accurately conveys the intent of the class. The doGrouping method is actually a Template Method, relying on the abstract methods classify and describe to provide the details of the grouping algorithm.

- SizeGrouper groups files by their size.

- HashGrouper groups files by some hash algorithm, which is passed in to the constructor, making HashGrouper open for extension, but closed for modification.

- The Program class is no longer a God class, as it has relatively minimal responsibilities. It reads a file list from the input, and converts it into a FileGroupList with a single group. It then calls FileGroupList::GroupBy for each Grouper, and finally tells the FileGroupList to output itself.

Design Patterns

- The Iterator pattern is used somewhat implicitly as all collections are returned as IEnumerable, which is essentially an Iterable interface. No custom iterators are created in this project, which is mostly because anything iterable is passed around in a built in collection.

- HashAlgorithm is essentially a Strategy for the HashGrouper, with the doHash method being the AlgorithmInterface, MD5Algorithm and SHA1Algorithm being the ConcreteStrategies, and HashGrouper being the Context. Luckily, HashAlgorithm doesn't need to access HashGrouper's internal state, which simplifies the design over some Strategies, but it's a Strategy none-the-less.

- Grouper is almost but not quite a Command. This is because a Grouper doesn't contain the receiver, and thus doesn't completely encapsulate the concept of an action. However, it is still a representation of an action.

Tradeoffs

And reasons why things haven't been done certain ways

... And design maxims I disagree with

- Grouper doesn't Avoid verb classes. While this class could perhaps be named better, but the task it performs is important, and cannot easily be merged into any other classes, especially as Grouper is essentially an interface with multiple implementations. (technically an abstract class with multiple instantiable subclasses, but it's effectively the same).

- Groupers could have used a Strategy pattern instead of being a Template Method, but it wouldn't make any sense, because it never needs to change on the fly, and would just have complicated the design. The HashGrouper essentially uses a Strategy pattern, but that is because the HashAlgorithms already existed. Also, implementing them in subclasses of the HashGrouper would violate the Single responsibility principle.

- I could have made FileGroupList contain the doGrouping method, and have, for instance, SizeFileGroupList. This would have avoided the verb class mentioned above. However, having the grouping strategy in a separate class is better Separation of concerns, and it saves having to instantiate a new FileGroupList for every type of operation.

- The doGrouping method doesn't separate commands and queries, but I feel it is justified as it is essentially a "Compute The Result" command, and currently I ain't gonna need the ability to get the result repeatedly.

- I haven't followed the Law of Demeter in my design, because it conflicts with my desire to avoid Fat interfaces as much as possible.

- All of my local state is protected, in order to make classes open for extension, but closed for modification. This conflicts with Riel's Avoid protected data maxim. However, I believe Object Encapsulation is better than Class Encapsulation, so using protected data is the Right Thing(tm). (At least, I find it models the real world better).

- I could have made the design make better use of Tell, don't ask by, for instance, telling Files to do more things rather than doing stuff with them in other classes, but in the interest of Separation of concerns and reusability I kept a a single responsibility for each of my classes.

- I could have created interfaces for everything, so that I could Program to the interface not the implementation, and follow the Dependency inversion principle, but I ain't gonna need it for this design, and the design is flexible enough that adding interfaces later on shouldn't be very hard. This is made possible because my design tries to be as loosely coupled as possible.

- It is a little unfortunate that the Grouper knows about (and depends on) FileGroups and Files, but other solutions using, perhaps, an Abstract Factory would be much more complicated.

Other Notes

- All my inheritance relations follow the Liskov substitution principle.

- I tried to Model the real world in my design as much as possible, leading to a class for every concept a user would have.

- I tried to encapsulate as much as possible without overcomplicating the design. The details of file IO and file info retrieval are encapsulated within the File class. The details of hash algorithms are encapsulated in their respective classes. The collections used are split off into reusable generic classes. etc

- I followed the SOLID principles as much as possible, although some didn't factor into the design as there was no real opportunity to break them.